-

Note

Codestory Podcast: Why We Started Arcade

Talk, Podcast, Arcade, Founder

-

Note

This New Way Podcast: AI Agents Run Your Inbox, Calendar & Socials

Talk, Podcast, Agents, Arcade

-

Note

What is MCP? LLM Tools and Where We Are Going with Agents

Talk, MCP, Agents, Tools, Security

-

Post

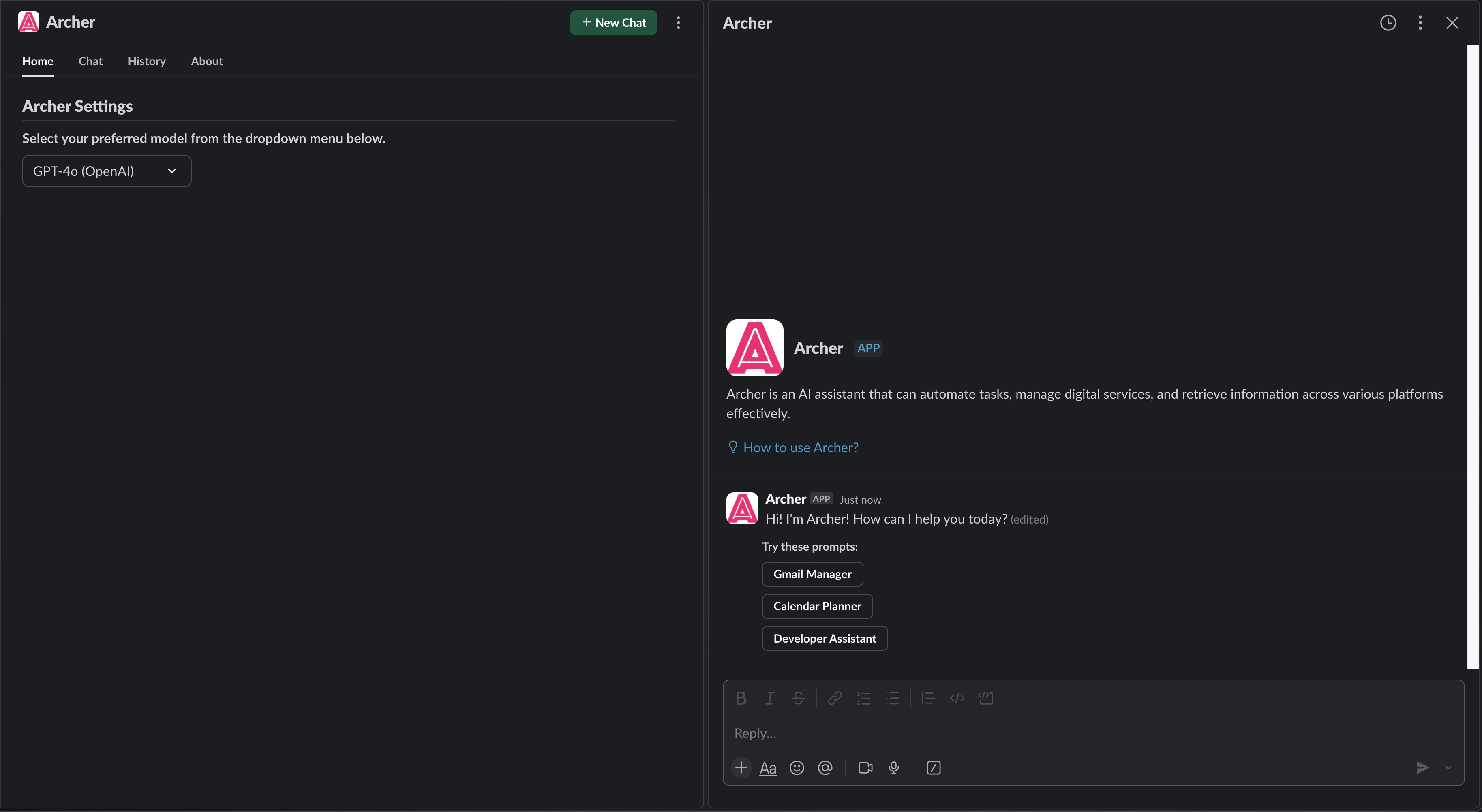

Archer: The Agentic Slack Agent

Arcade, Developer, Slack, Agent, langgraph, modal

-

Note

AI Agents: How to Make Them Useful

Talk, Agents, Productivity

-

Post

Agents in Production - Agentic Tool Calling

Arcade, Talk, LLM, Agent, Tool-calling

-

Note

Llama 3.2 Tool Calling

LLM, Tool-Calling, LLama

-

Note

Lambda Pricing

Serverless, Developer, AWS

-

Post

So I'm starting a company: Arcade.dev

Arcade, Developer, Arcade AI

-

Post

How to Build a Distributed Inference Cache with NVIDIA Triton and Redis

Article, Redis, NVIDIA, Cache

-

Post

The Generative AI Application Stack: LLMs, Integrators, and Vector Databases

Talk, GenerativeAI, LLM, Startups, Panel

-

Post

Vector Databases and Large Language Models - Part 2

Talk, Vector-similarity, LLM, Redis

-

Post

MLLearners: Large Language Models and Vector Databases

Talk, Vector-similarity, LLM, Redis

-

Note

Slides LLM in Production Talk: Vector Databases and Large Language Models

Talk, Vector-similarity, LLM, Redis

-

Post

Vector Databases and Large Language Models

Talk, Vector-similarity, LLM, Redis

-

Note

Slides for NVIDIA GTC Talk: Improving Data Systems for Merlin and Triton

Talk, Vector-similarity, Feature-Store, Recommendation-systems, Redis

-

Post

Vector Similarity Search Panel

Talk, Vector-similarity, Redis

-

Post

Real-time Recommendation Systems with Redis and NVIDIA Merlin

Redis, Recommendation-Systems, Feature-Store, AI, Vector-similarity

-

Post

Python Libraries You Might Not Know: CIBuildWheel

PLYMNK, Developer, Python

-

Note

Brief Primer on Parallel Programming

Python, HPC, Developer