Nvidia released its new RTX 30 series, and the top-of-the-line consumer graphics cards sold out in a matter of seconds. Nvidia stock is at an all-time high. Demand for Graphics Processing Units (GPUs) keeps growing for specialized computation in gaming and AI development. The latest wave of AI has been spurred on by the rapid growth of GPU compute capability. A100s and V100s with High Bandwidth Memory (HBM), packed into Cray, DGX, and similar systems, power many of the foremost Deep Learning research groups and labs. Does this mean you need the latest and greatest GPU to practice Deep Learning? What should you do if you want to break into Deep Learning and not the bank?

This article details various places to access GPU compute and how I turned an old Linux machine into a decent system for learning Deep Learning.

Recently, I have been asked unusually often how to get started in Deep Learning. People from many backgrounds (students, software engineers, climate scientists, designers) have asked me the best way to begin. Almost everyone has said something along the lines of:

“I don’t have access to GPU compute nor money for cloud resources. Don’t you need a GPU? What do I do?”

Get creative! You don’t have to have stacks of cash to spend on expensive GPUs that can cost thousands of dollars. Buying the latest and greatest GPU isn’t the only option.

It is worth addressing the fact that I haven't mentioned any prerequisite for getting started in Deep Learning besides owning a GPU. Hopefully, it is obvious that if you don't know anything about Deep Learning, a graphics card is not going to magically inject that knowledge into your brain. I'll spare you a digression into what to do if you are completely new to the field, but this book is a pretty decent start: it suggests solid further reading, and it is free.

Many students, data scientists, software engineers, and even some machine learning practitioners may not need a GPU at first, whether their own or rented. GPUs are not always the best choice for a given task; CPUs and other specialized processors like Tensor Processing Units (TPUs) each have their advantages.[1]

In my experience, many datasets, especially tabular ones, can be handled by an XGBoost model trained on a five-year-old MacBook. The same goes for many other machine learning models and datasets; however, it is not necessarily true of DL.

Access to GPU compute can significantly decrease the training time of DL models, allowing for faster iteration. Some DL sub-fields, like Natural Language Processing (NLP), pretty much require GPU compute because of the sheer amount of data needed to train models. Because of this, GPUs are the de facto device for training many types of DL models.

Rent the System ($0 - $inf)

Cloud platforms are the easiest way to get started by far. There are multiple places where you can get deep learning resources on demand.

A word of warning to those choosing this route: this can get extremely expensive. I once left an instance running, and it ended up costing me more than the GPU I bought for this article. This is the biggest downside of the cloud: you don't own it, so you keep paying for as long as it runs.
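To put a number on that warning, here is a back-of-the-envelope sketch. The $3.00/hour rate is an assumption for illustration only, not a quoted price from AWS or anyone else; plug in your provider's current on-demand rate. The $100 is what I ended up paying for the GPU later in this article.

```python
def idle_cost(hourly_rate_usd: float, hours: float) -> float:
    """Total on-demand charge for an instance left running for `hours` hours."""
    return hourly_rate_usd * hours


def hours_until_spent(budget_usd: float, hourly_rate_usd: float) -> float:
    """Billed hours it takes for a running instance to burn through `budget_usd`."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    return budget_usd / hourly_rate_usd


if __name__ == "__main__":
    RATE = 3.00        # assumed USD/hour for a single-GPU instance; check real pricing
    GPU_PRICE = 100.0  # what I paid for the used GTX 1060 later in this article
    print(f"Forgotten over a weekend (48 h): ${idle_cost(RATE, 48):.2f}")
    print(f"Forgotten for a month (720 h): ${idle_cost(RATE, 720):.2f}")
    print(f"Hours until it costs as much as the GPU: {hours_until_spent(GPU_PRICE, RATE):.1f}")
```

At any realistic GPU rate, a single forgotten weekend can cost more than a used card.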

Kaggle

Kaggle is a website for machine learning competitions. It provides users with free GPU and CPU time through its notebooks platform. The competitions are pretty fun, but the submission system can take some getting used to. Overall, Kaggle is a great place to learn because of the sheer number of resources people post there, mostly in pursuit of virtual medals.[2]

Kaggle is probably the easiest way to get started if you are a complete beginner and have no idea how to build or improve a system for DL.

Just go get an account, open your first notebook and you’re instantly a seasoned deep learning engineer with years of wisdom and knowledge. It’s as easy as that.

Google Cloud

Access to a GPU and a TPU? Cool. Google Colab is very similar to Kaggle: hosted notebooks with the ability to access a TPU or GPU. Personally, I use Amazon because I find it easier, so I will just point you to this article, which does a good job of describing how to get started with Google Cloud.
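Whichever hosted notebook you pick, a sensible first cell is one that confirms a GPU is actually attached after you select a GPU accelerator in the notebook's settings. This minimal sketch assumes PyTorch is available, which it usually is on both platforms; it does not detect TPUs, which need different libraries.

```python
def describe_accelerator() -> str:
    """Say which device PyTorch can use, without failing if PyTorch is absent."""
    try:
        import torch  # assumption: the notebook image ships with PyTorch
    except ImportError:
        return "PyTorch is not installed in this environment"

    if torch.cuda.is_available():
        props = torch.cuda.get_device_properties(0)  # first visible GPU
        mem_gib = props.total_memory / 1024**3       # total_memory is in bytes
        return f"GPU 0: {props.name}, {mem_gib:.1f} GB of memory"
    return "No CUDA GPU visible; PyTorch will fall back to the CPU"


print(describe_accelerator())
```

If this reports no GPU, the accelerator setting is the first thing to check.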

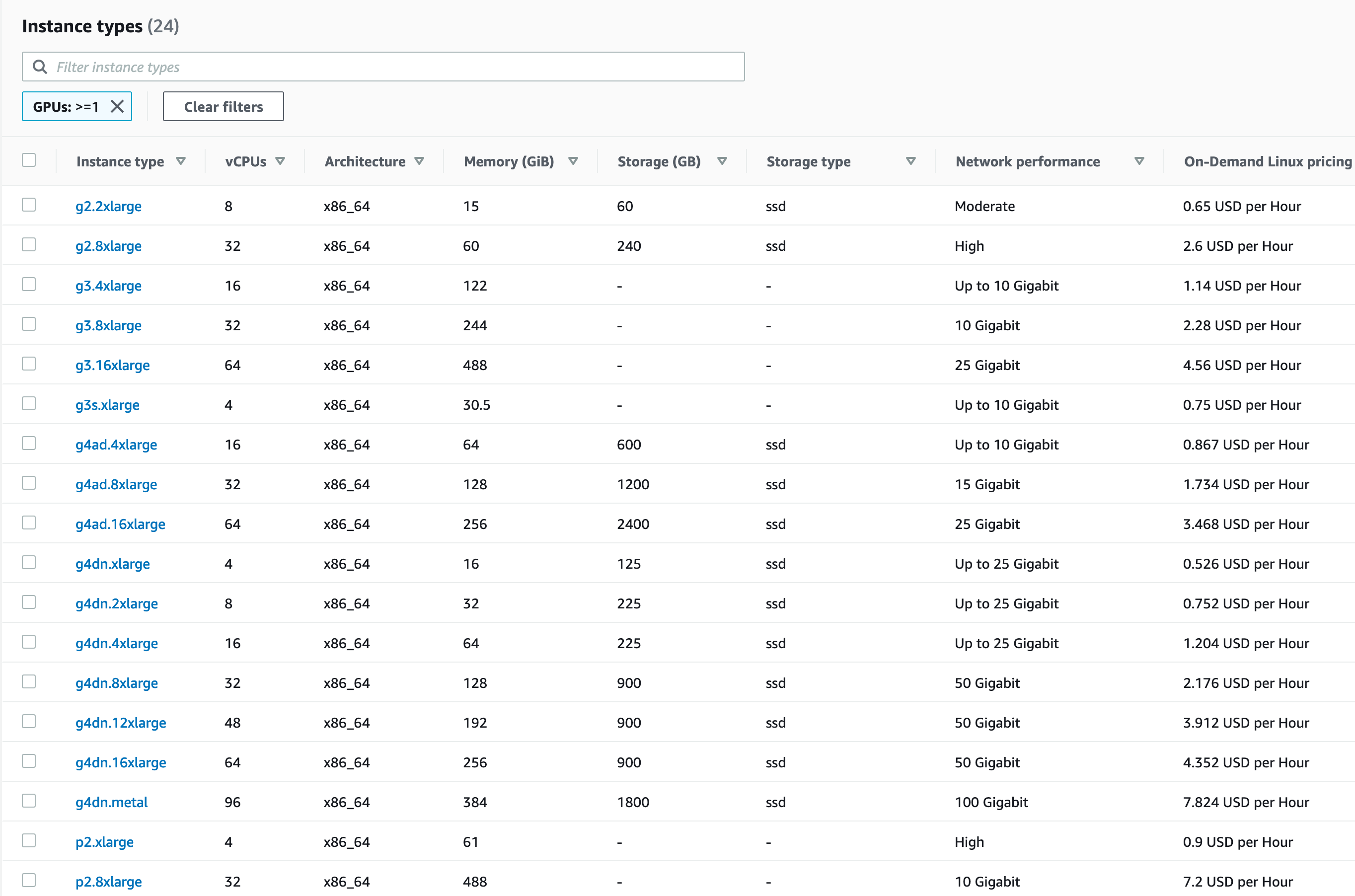

Amazon

The AWS list of services seems to grow by the second. Amazon’s EC2 service is an easy way to get a dedicated machine with GPU compute. The image below shows EC2 server instances with GPU compute.

With an AWS account,[3] you can pick any one of these and spin it up in a matter of minutes. Connecting to the instance over SSH is quick and works with editors that have remote development support, like VS Code.

Buy a New System ($700 - $100M)

If you have the money and are willing to spend it, buying a new system with GPU compute is the easiest way to get yourself or your company a DL-ready system that you don't have to rent. For the average Joe starting out, the cheapest system that will be practical for DL costs about $700.[4]

$700 as a starting point might seem expensive, but the price is driven by the type of GPU required for DL. Honestly, it's fairly cheap by the standards of today's GPU market: most daily practitioners are lucky if they can get by with a GPU that costs $700 on its own.

The GPU you train DL models on should have at least 4-8 GB of memory (some would argue that even 4-6 GB is not enough). Otherwise, there won't be enough memory to train the larger models used in areas like image classification, segmentation, or NLP.
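Why does a 4-8 GB card fill up so fast? A rough sketch, assuming full fp32 training with the Adam optimizer: each parameter needs 4 bytes for the weight, 4 for its gradient, and 8 for Adam's two moment estimates, or 16 bytes before a single activation is stored. The parameter counts below are approximate, and mixed precision or a different optimizer changes the numbers.

```python
# Rule of thumb for fp32 training with Adam:
# weights (4 B) + gradients (4 B) + two Adam moment buffers (8 B) = 16 bytes/param.
BYTES_PER_PARAM_FP32_ADAM = 16


def static_training_memory_gb(num_params: int,
                              bytes_per_param: int = BYTES_PER_PARAM_FP32_ADAM) -> float:
    """Memory (GiB) for weights, gradients, and optimizer state only.

    Activations, which grow with batch size and input size, and framework
    overhead such as the CUDA context come on top of this figure.
    """
    return num_params * bytes_per_param / 1024**3


if __name__ == "__main__":
    # Approximate parameter counts of two well-known models.
    for name, params in [("ResNet-50", 25_600_000), ("BERT-base", 110_000_000)]:
        print(f"{name}: ~{static_training_memory_gb(params):.2f} GB before activations")
```

Activations usually dominate, so treat this static figure as a floor rather than an estimate of total usage.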

For companies or large corporations looking to research cutting-edge AI, top-of-the-line cluster systems with thousands of nodes, each packed with 8+ GPUs, can cost as much as $100 million. For those systems, I suggest HPE/Cray. I am obviously biased because I work for HPE/Cray, but the Cray EX with 40 GB A100s is frankly a downright beast. Some days, when I run nvidia-smi and see 1000 nodes, each with 4 A100 GPUs with 40 GB of GPU memory, I feel very lucky and privileged. Speaking of which, I'm hiring![5]

For everyone between average Joe and large corporation, here are some places to buy a ready-to-go DL box.

Lambda

Lambda has some really interesting options for enterprises as well as average users. For the price of a MacBook Pro, you can get a laptop[6] with an NVIDIA RTX 2080 Super Max-Q GPU that packs 3072 CUDA cores and 8 GB of memory. As a bonus, it comes preloaded with some of the most popular deep learning libraries.

Lambda also sells servers, clusters, workstations, and more. Personally, I don't think I will ever go the prebuilt route because I like choosing the hardware and building the system myself. However, I have to admit that Lambda seems to have done a pretty good job of choosing their hardware.

Newegg

Newegg has some prebuilt and refurbished machines, as well as the ability to customize a build and get a readout of all the parts to buy. I love Newegg and buy there whenever possible.

Unfortunately, this option can, and usually does, cost thousands of dollars. Since this article is geared towards readers with less financial freedom, I won't go much deeper into brand-new systems. If hardware scares you and you have the money, stop reading here, go buy a stack of 3090s (if you can find any), and Venmo me when you get a chance.

Get a Used System ($300 - $2000)

In my opinion, this is the most underrated option. Buying a used machine with a slightly outdated GPU is a great way to save money while still growing your deep learning skill set. However, I would warn against buying any used system that costs more than $2000 and hasn't been refurbished by a reputable outlet like Newegg.

For this option, just look on eBay and Craigslist in your area. There are often incredible deals right after a new line of Nvidia GPUs comes out.

See the following section on building it yourself to ensure that the machine you get will live up to your expectations.

Build a System ($400 - $10000)

Building a deep learning box yourself is the best way to get the exact system you want. There are a few resources that are very helpful in building your own system.

- PCPartPicker: Make sure all your components are compatible

- Newegg PC Builder: Newegg’s service to help you select components

- Tim Dettmers: Two great blog posts on hardware for DL and which GPUs to buy

You can build a fairly cheap system from scratch, but the kicker is the GPU. A mix of used and new components is a great way to save money. A decent system with a good, but not great, GPU costs around $1500 on average.[7]

Upgrade your System ($50 - $600)

Upgrading or adding a GPU to an existing system can be one of the easiest and most cost-effective ways to get the hardware you need for DL. Some DL-ready GPUs can be found on sites like Craigslist for as little as $100.

To upgrade or add a GPU, you need to make sure it is compatible with your existing hardware. Plugging each component of your system into PCPartPicker will help ensure that everything works as expected. Overall, there are a few hard requirements your system must meet to use a new GPU.

Base level system requirements

- A PCIe x16 slot in your motherboard (could be where your old GPU is located)

- An adequate power supply

- A big enough box (some GPUs are quite beefy)
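PCPartPicker does this for you, but the logic behind these three requirements is simple enough to sketch. The 80% PSU headroom rule and every wattage, plug count, and length in the example are assumptions for illustration; take real numbers from your parts' spec sheets. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Assumed rule of thumb: keep the estimated draw at or below 80% of the PSU rating.
PSU_HEADROOM = 0.8


@dataclass
class System:
    has_pcie_x16_slot: bool
    psu_watts: int              # rated output of the power supply
    psu_pcie_power_plugs: int   # spare 6/8-pin PCIe power cables on the PSU
    other_parts_watts: int      # your estimate for CPU, drives, fans, etc.
    max_gpu_length_mm: int      # GPU clearance from the case's spec sheet


@dataclass
class GPU:
    name: str
    board_power_watts: int      # from the card's spec sheet
    pcie_power_plugs_needed: int
    length_mm: int


def compatibility_problems(system: System, gpu: GPU) -> List[str]:
    """Return the base-level requirements this GPU would violate (empty list = OK)."""
    problems = []
    if not system.has_pcie_x16_slot:
        problems.append("motherboard has no free PCIe x16 slot")
    load = system.other_parts_watts + gpu.board_power_watts
    if load > system.psu_watts * PSU_HEADROOM:
        problems.append(f"estimated load {load} W is over {PSU_HEADROOM:.0%} "
                        f"of a {system.psu_watts} W PSU")
    if gpu.pcie_power_plugs_needed > system.psu_pcie_power_plugs:
        problems.append(f"{gpu.name} needs {gpu.pcie_power_plugs_needed} PCIe power "
                        f"plug(s); the PSU has {system.psu_pcie_power_plugs}")
    if gpu.length_mm > system.max_gpu_length_mm:
        problems.append(f"{gpu.name} is {gpu.length_mm} mm long; "
                        f"the case fits {system.max_gpu_length_mm} mm")
    return problems


if __name__ == "__main__":
    # Every number below is illustrative, not measured from my machine.
    my_box = System(True, 500, 1, 150, 300)
    card = GPU("GTX 1060 6GB", 120, 1, 250)
    print(compatibility_problems(my_box, card) or "No problems found")
```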

Depending on the GPU you get, your system might require more than this. This route is the best for those who have a good system that is not necessarily suited for DL. If you are spending more than $600 on a GPU for an existing system, I recommend buying a whole new system to go along with it.

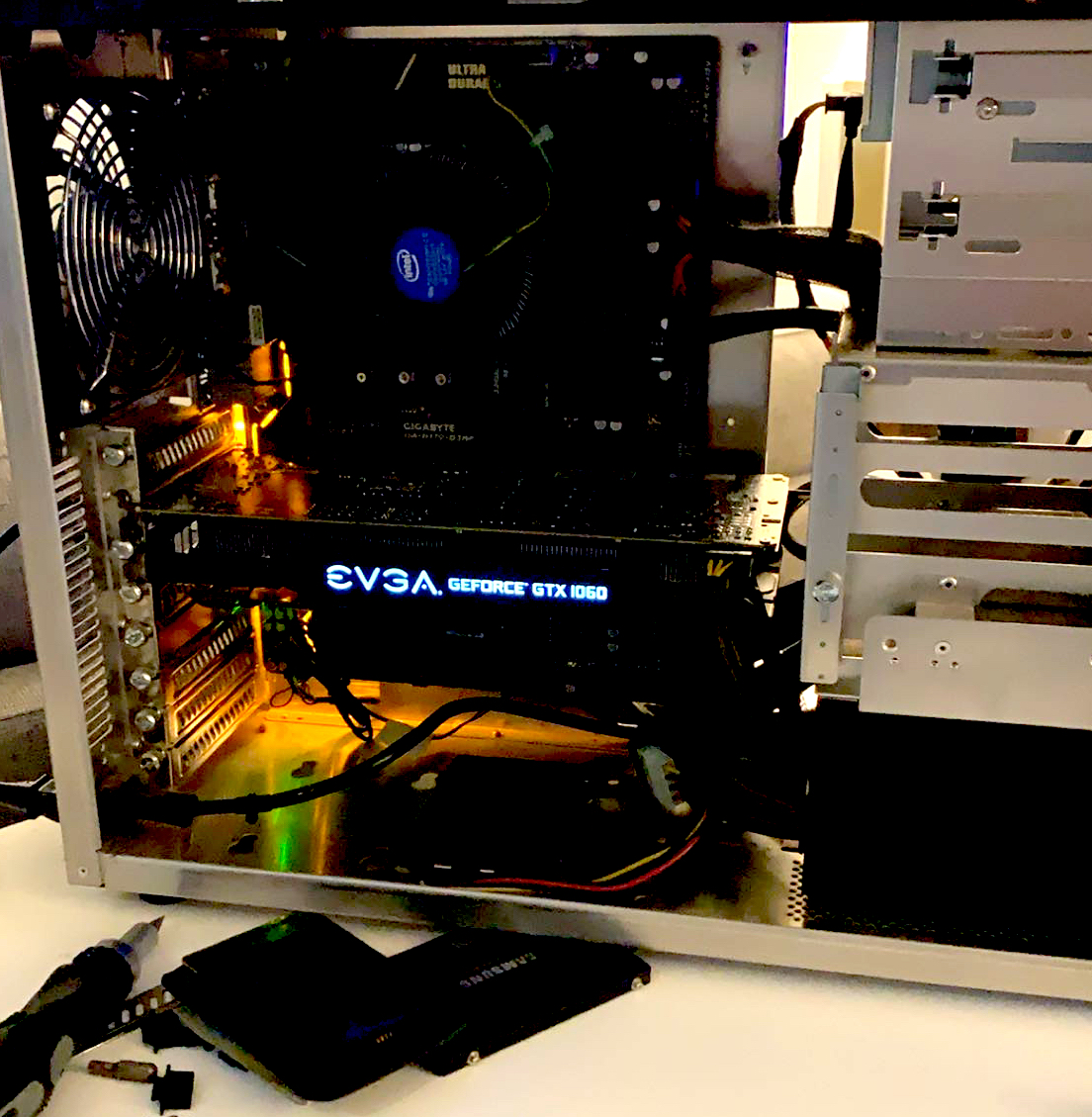

Upgrading the GPU in my Old Linux Machine

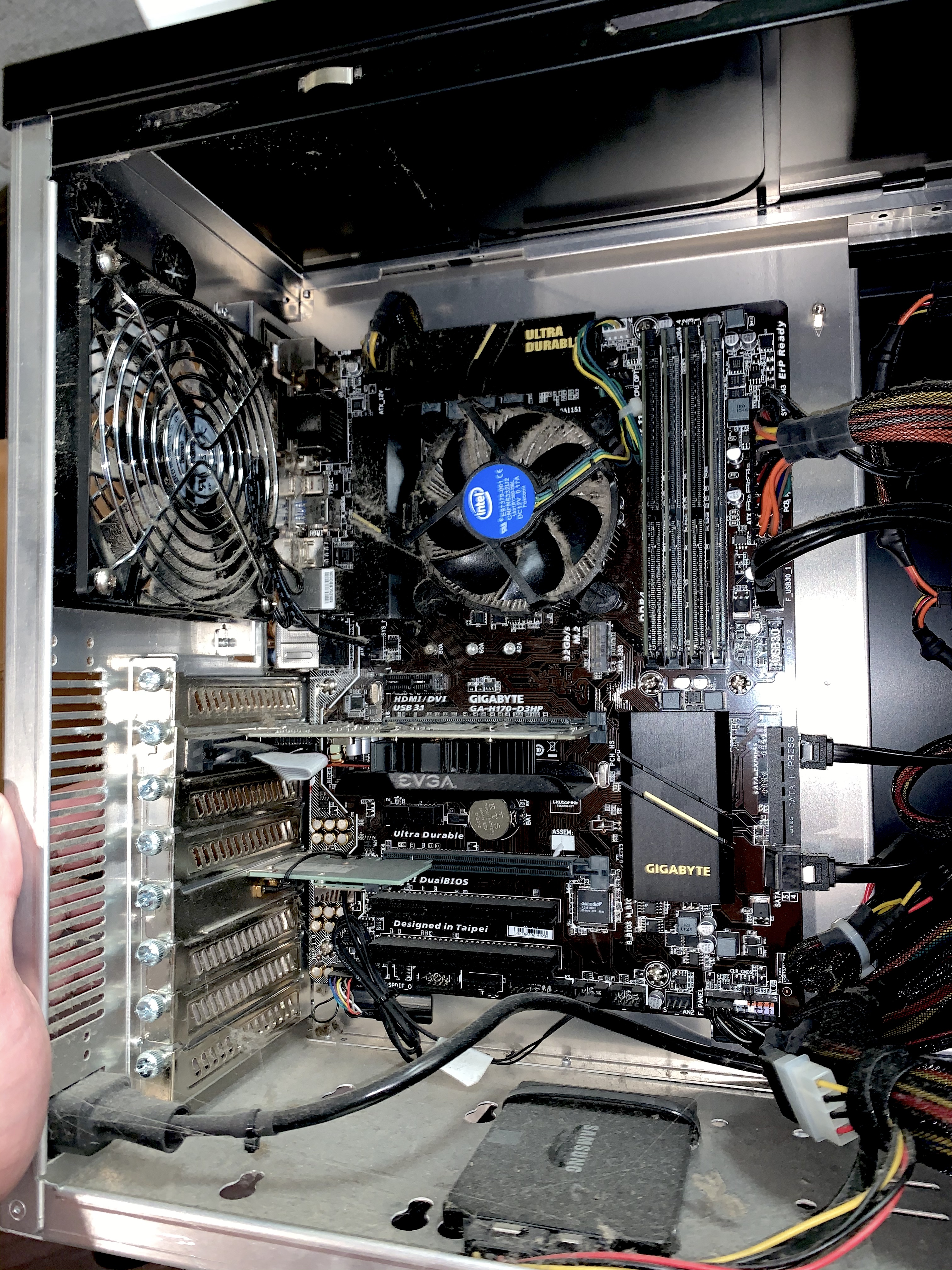

Since upgrading an existing system can be tricky, I figured I would upgrade one of my own systems for this article.

Here is the system that I started with:

Before the upgrade, this system had an EVGA GeForce GT 730 with 1 GB of memory, a 4-core Intel Core i5, and 32 GB of DDR4 memory. Despite being a solid system for general software development, it was incapable of training large DL models because of its small GPU memory (1 GB).

Another problem was that the 730's GPU architecture (Kepler, maybe?) wasn't supported by current versions of CUDA, Nvidia's secret sauce (a low-level GPU library) that keeps DL on Nvidia chips.[8] Be careful when buying used or older GPUs: make sure they are actually usable for DL (compatible with the libraries you want to use) before buying one.
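One way to do that check is to compare the card's CUDA compute capability against the minimum your framework build supports. The table lists values from NVIDIA's published compute capability table for cards mentioned in this article. The `(3, 7)` minimum is a hypothetical placeholder: the real cutoff depends on your framework and CUDA version, so look it up in their release notes.

```python
from typing import Dict, Tuple

# CUDA compute capabilities (major, minor) of cards mentioned in this article,
# per NVIDIA's CUDA GPUs table. The GT 730 is omitted because it shipped in
# several variants with different capabilities; look up your exact model.
COMPUTE_CAPABILITY: Dict[str, Tuple[int, int]] = {
    "GeForce GTX 1050 Ti": (6, 1),
    "GeForce GTX 1060": (6, 1),
    "GeForce RTX 2060": (7, 5),
    "Tesla V100": (7, 0),
    "A100": (8, 0),
    "GeForce RTX 3090": (8, 6),
}


def is_supported(card: str, min_capability: Tuple[int, int]) -> bool:
    """True if `card` meets the minimum compute capability your library build needs."""
    if card not in COMPUTE_CAPABILITY:
        raise KeyError(f"Unknown card {card!r}: look up its compute capability first")
    # Tuples compare element-wise, so (6, 1) >= (3, 7) is True.
    return COMPUTE_CAPABILITY[card] >= min_capability


if __name__ == "__main__":
    # Hypothetical requirement; the real minimum comes from your framework's release notes.
    REQUIRED = (3, 7)
    for card in ("GeForce GTX 1060", "GeForce RTX 3090"):
        print(card, "OK" if is_supported(card, REQUIRED) else "too old")
```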

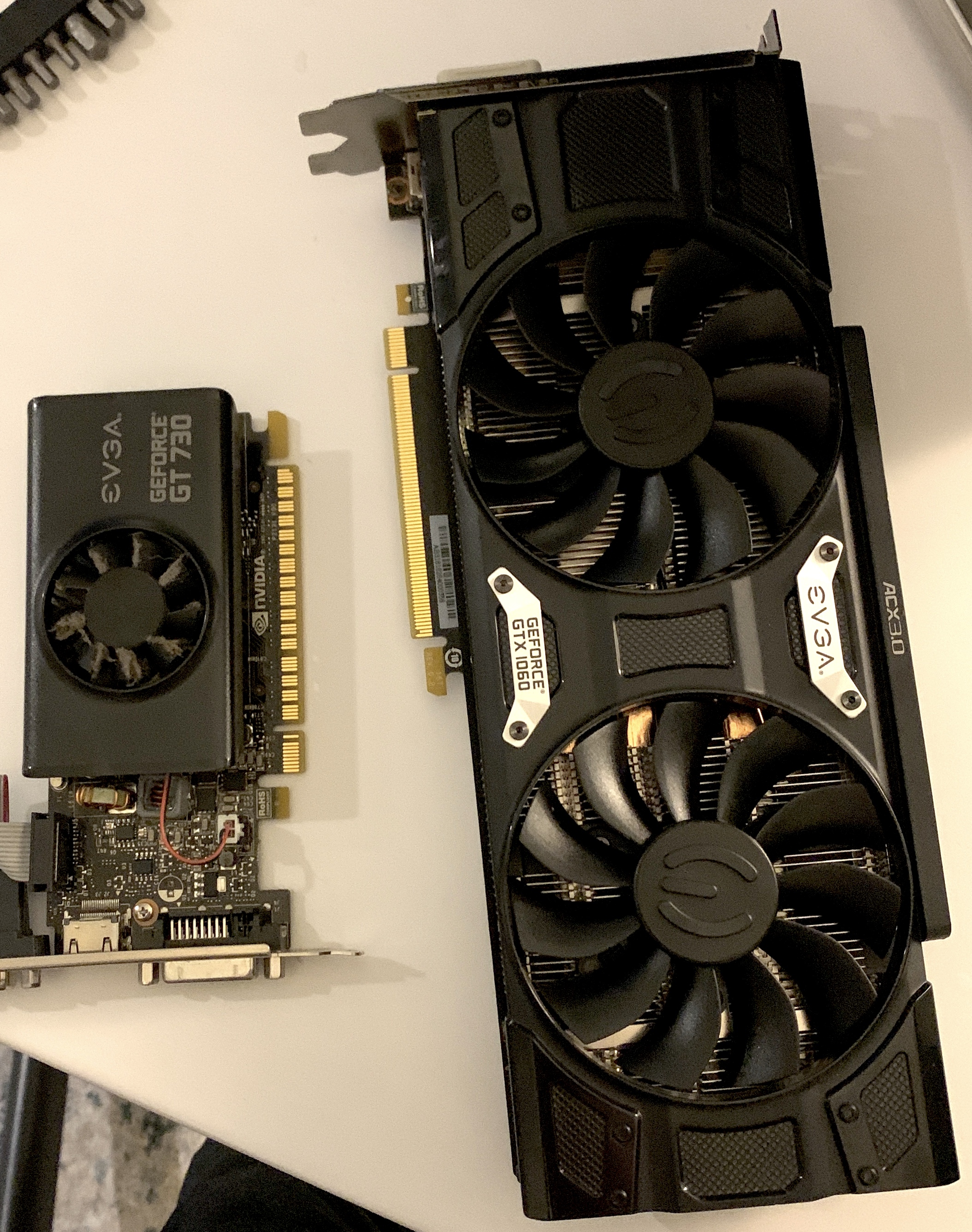

Find a GPU

As mentioned previously, finding a previous-generation GPU on Craigslist is often a good way to save money on a GPU that can train DL models. There are always people looking to get rid of their last-generation GPUs.

For the purposes of this article, I kept my GPU budget under $150. I searched Craigslist around my area for GPUs that would fit my build.

I emailed about four people before one responded; he had an EVGA GeForce GTX 1060 with 6 GB of memory available for $200. After researching the GPU, I found that this was a fair price for the card. Despite this, I offered $75. We eventually settled on $100 for the GPU, which was a steal. When I met the seller, he pulled up in a bright red Tesla, so I don't think he minded getting a hundred knocked off his asking price.

And just in case you missed it, Tim Dettmers has a great blog post on choosing a GPU for deep learning here.

Ensure the GPU will work

When looking for a GPU, plug your system components (motherboard, memory, power supply, etc.) into PCPartPicker to ensure that your system will support the new GPU. Usually this comes down to the three base-level requirements mentioned earlier (PCIe x16 slot, power supply, and case size).

Hardware Install

If you did the last step right and got a GPU that works with your system, this can be as easy as just plugging it in. However, nicer GPUs often have specific power or cooling requirements that must be taken care of during the install.

For installing your GPU, I recommend finding the manuals for your motherboard and the GPU itself. For me, those were the following:

These will help guide you through any pieces of the hardware install that may be tricky.

Once the hardware is installed, you will have to install the drivers for your GPU. Since this differs for each operating system, I won’t detail it here. Comment if you want to hear about how I did this!
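Whatever the OS, `nvidia-smi`, which ships with NVIDIA's driver, is a quick way to confirm the install worked and that the card reports the memory you paid for. This sketch wraps a documented `nvidia-smi` query (see `nvidia-smi --help-query-gpu`) with Python's standard library; the 4 GB threshold is the article's rough minimum, and the function names are my own.

```python
import shutil
import subprocess
from typing import Dict, List

# "nounits" makes memory.total a plain number of MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,driver_version",
    "--format=csv,noheader,nounits",
]
MIN_MEMORY_GB = 4  # the article's rough minimum for learning DL


def parse_gpus(csv_text: str) -> List[Dict]:
    """Turn nvidia-smi CSV output (one GPU per line) into dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # rsplit keeps any comma inside the GPU name intact
        name, mem_mib, driver = (f.strip() for f in line.rsplit(",", 2))
        gpus.append({"name": name, "memory_gb": int(mem_mib) / 1024, "driver": driver})
    return gpus


def installed_gpus() -> List[Dict]:
    """Empty list if nvidia-smi is missing, which usually means no driver yet."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpus(result.stdout)


if __name__ == "__main__":
    gpus = installed_gpus()
    if not gpus:
        print("nvidia-smi not found: the driver is probably not installed yet")
    for gpu in gpus:
        verdict = "OK" if gpu["memory_gb"] >= MIN_MEMORY_GB else "below 4 GB"
        print(f'{gpu["name"]}: {gpu["memory_gb"]:.1f} GB, driver {gpu["driver"]} ({verdict})')
```

If `nvidia-smi` exists but exits with an error, `check=True` raises, which usually points at a driver problem worth fixing before installing any DL libraries.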

Conclusion

Thanks for reading! If you liked it, please share. If you didn’t, I can’t believe you made it this far!

Also, if you would like me to deep dive on any part, please comment and I’ll put it in the backlog!

1. A paper comparing TPU, GPU, and CPU for Deep Learning, and a slightly more consumable Medium article about the same topic

2. Yes, Kaggle will award you medals for posting notebooks, participating in discussions, posting datasets, and, of course, competing. If you need some positive reinforcement along with your GPU access to learn a new field, look no further than Kaggle.

3. Fair warning: do not leave these instances on for long periods of time. It's almost too easy to accidentally leave them running.

4. I am basing the minimum price of $700 on prebuilt/refurbished systems on Newegg that have GPUs with at least 4 GB of GPU memory. An NVIDIA GTX 1050 Ti is about the least capable GPU that still makes sense to purchase for learning DL if you want to own your hardware. Below this level of GPU compute, I personally recommend the cloud approaches listed in the article.

5. Here is a tweet about it. Feel free to DM me on Twitter if you're interested!

6. The laptop is called the TensorBook. I don't know anyone who has one, but it certainly has the specs to be a good laptop for DL.

7. Based on prebuilt systems available on Newegg that have an NVIDIA RTX 2060, which, at the time of writing, I consider a middle-of-the-pack GPU.

8. On the state of Deep Learning outside CUDA's walled garden
