Recently, a friend sent me a Wired article entitled “The Power and Paradox of Bad Software”. The short piece, written by Paul Ford, discusses the idea that the software industry might be too obsessed with creating better and better tools for itself while neglecting mundane software such as resource scheduling systems or online library catalogs. The author claims that the winners of the bad software lottery are the computational scientists that develop our climate models. Since climate change might be one of the biggest problems for the next generation, some might find it a bit worrying if one of our best tools for examining climate change was written with “bad software”.

In this post, I discuss whether climate scientists really lost the “bad software sweepstakes”. I’ll cover the basics of climate models, what software is commonly used in climate modeling and why, and what alternative software exists.

In the aforementioned article, Paul Ford states:

Best I can tell, the bad software sweepstakes has been won (or lost) by climate change folks. One night I decided to go see what climate models actually are. Turns out they’re often massive batch jobs that run on supercomputers and spit out numbers. No buttons to click, no spinny globes or toggle switches. They’re artifacts from the deep, mainframe world of computing. When you hear about a climate model predicting awful Earth stuff, they’re talking about hundreds of Fortran files, with comments at the top like “The subroutines in this file determine the potential temperature at which seawater freezes.” They’re not meant to be run by any random nerd on a home computer.

I’d first like to mention that I am that random nerd who ran climate models in my college dorm room on a small Linux computer. Personal anecdotes aside, software developers, even those in HPC, might not be familiar with climate modeling. The field is somewhat niche, so first I’ll explain the basics of climate models.

So what are climate models?

“Model” is a bit of a catch-all. The easiest way to think about one is as a piece of software. This isn’t the type of software that populates your Twitter feed or plays your favorite track on Spotify. Put simply, climate models try to simulate the world. The simulations usually start at some preindustrial control state (around 1850 to 1900) and iteratively step forward in time. At each discrete time step, physical quantities of interest are calculated and the state of the model is updated.
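To make “step forward in time” concrete, here is a deliberately tiny sketch: a zero-dimensional energy balance model in which the whole planet is a single grid cell. It is not how CESM is written. The solar constant (1361 W m⁻²), albedo (0.3), effective emissivity (0.61), and heat capacity (4.2 × 10⁸ J m⁻² K⁻¹, roughly a 100 m ocean mixed layer) are illustrative assumptions, and the simple forward-Euler stepping is chosen for readability. Each loop iteration computes the radiative imbalance and updates the model state, the same pattern a full model follows on millions of grid cells.

```python
SIGMA = 5.670374419e-8     # Stefan-Boltzmann constant, W m^-2 K^-4
SECONDS_PER_YEAR = 3.156e7

def step(temp, dt, solar=1361.0, albedo=0.3, emissivity=0.61, heat_capacity=4.2e8):
    """Advance the global-mean temperature (K) by one forward-Euler step of dt seconds."""
    absorbed = solar * (1.0 - albedo) / 4.0   # incoming sunlight averaged over the sphere, W m^-2
    emitted = emissivity * SIGMA * temp**4    # outgoing longwave radiation, W m^-2
    return temp + dt * (absorbed - emitted) / heat_capacity

def run(temp0, years, dt=SECONDS_PER_YEAR):
    """Start from temp0 and step forward one model year at a time, keeping every state."""
    states = [temp0]
    for _ in range(years):
        states.append(step(states[-1], dt))
    return states

def equilibrium_temp(solar=1361.0, albedo=0.3, emissivity=0.61):
    """Temperature at which absorbed and emitted radiation balance."""
    return (solar * (1.0 - albedo) / 4.0 / (emissivity * SIGMA)) ** 0.25

states = run(280.0, 200)
print(f"year 0: {states[0]:.2f} K, year 200: {states[-1]:.2f} K")
```

Starting from 280 K, the temperature climbs year by year and settles near the equilibrium of roughly 288 K.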

Climate models like the Community Earth System Model (CESM) are made up of multiple models, each responsible for its own part of the world: the ocean, atmosphere, ice, rivers, etc.[1] These components communicate with each other to update the state of the global climate, which is why climate models are often referred to as “coupled climate models”.
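A toy sketch of what “coupled” means, assuming two hypothetical single-column components (an atmosphere and an ocean) that each own one temperature and exchange a heat flux through a coupler once per model hour. The exchange coefficient k = 20 W m⁻² K⁻¹ and the heat capacities (about 10⁷ J m⁻² K⁻¹ for an atmospheric column, 4.2 × 10⁸ J m⁻² K⁻¹ for a 100 m ocean layer) are assumptions for illustration; real couplers also regrid fields between the components’ different grids and exchange many more quantities. The property to notice is that whatever heat one component loses, the other gains.

```python
from dataclasses import dataclass

@dataclass
class Component:
    """A hypothetical single-column model component with one state variable."""
    name: str
    temp: float           # K
    heat_capacity: float  # J m^-2 K^-1

    def export_state(self):
        """What this component shares with the coupler."""
        return self.temp

    def import_flux(self, flux, dt):
        """Apply a heat flux (W m^-2) from the coupler; positive warms this component."""
        self.temp += flux * dt / self.heat_capacity

def couple(atm, ocn, steps, dt, k=20.0):
    """Toy coupler: exchange a heat flux proportional to the air-sea temperature difference."""
    for _ in range(steps):
        flux = k * (ocn.export_state() - atm.export_state())  # W m^-2, ocean -> atmosphere
        atm.import_flux(flux, dt)
        ocn.import_flux(-flux, dt)

def total_heat(*components):
    """Heat content (J m^-2, relative to 0 K); the exchange should conserve it."""
    return sum(c.heat_capacity * c.temp for c in components)

atm = Component("atmosphere", temp=280.0, heat_capacity=1.0e7)
ocn = Component("ocean", temp=290.0, heat_capacity=4.2e8)
before = total_heat(atm, ocn)
couple(atm, ocn, steps=24 * 30, dt=3600.0)  # one model month of hourly coupling
print(atm.temp, ocn.temp, total_heat(atm, ocn) - before)
```

The atmosphere warms, the ocean cools slightly, and the printed heat-content difference is zero up to floating-point rounding.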

Climate models often work on massive grids. There are many types of grids, but for simplicity’s sake, just think of a piece of graph paper. Each little square of the grid is responsible for the climate of one section of the world being modeled.

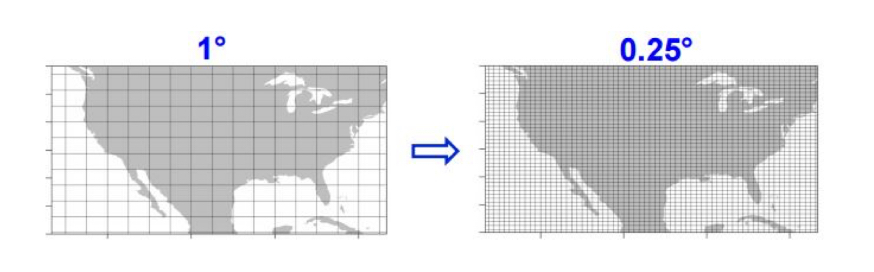

Model grids can be made finer or coarser. Going back to the graph paper example, increasing the resolution amounts to dividing each square into a number of smaller squares. The picture below shows two grid sizes (1 degree and 1/4 degree) that are commonly used in climate modeling.[2]

Climate models are a bit like cameras: the higher the resolution, the more detail you can see in the picture. Generally, the finer the resolution, the more scientifically useful the output of the simulation.

Finer-resolution models require more compute power: the more grid cells, the more quantities to calculate. Scientists need high-resolution models because they can resolve small-scale processes that, in aggregate, have a large impact on the predictions of the model.
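A back-of-the-envelope sketch of why finer grids get expensive, assuming a regular global latitude-longitude grid (real model grids, including CESM’s, differ) and ignoring vertical levels. Going from 1 degree to 1/4 degree multiplies the number of horizontal cells by 16. The `cfl_limited` flag adds a common rule of thumb for explicit schemes: the stable time step shrinks in proportion to the grid spacing, so 4× finer cells also need roughly 4× more time steps. Treat the result as an order-of-magnitude estimate, not a measured cost.

```python
def latlon_cells(resolution_deg):
    """Horizontal cells in a regular global latitude-longitude grid (vertical levels ignored)."""
    n_lon = round(360 / resolution_deg)
    n_lat = round(180 / resolution_deg)
    return n_lon * n_lat

def relative_cost(fine_deg, coarse_deg, cfl_limited=True):
    """Rough compute cost of the fine grid relative to the coarse grid."""
    refinement = coarse_deg / fine_deg
    cost = latlon_cells(fine_deg) / latlon_cells(coarse_deg)  # more cells per time step
    if cfl_limited:
        cost *= refinement  # assumed: time step shrinks in proportion to grid spacing
    return cost

print(latlon_cells(1.0), latlon_cells(0.25))  # 64800 1036800
print(relative_cost(0.25, 1.0))               # 64.0
```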

Not only do these models require computational power, they also require massive storage capacity. As the resolution is increased, the output goes from gigabytes to hundreds of terabytes very quickly. For example, the aforementioned CESM model, when run for 55 model years with a 1/4 degree grid in the atmosphere (as shown on the right in the picture above) and a 1/10 degree grid in the ocean, produces over 140 terabytes of output data.
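To see how quickly storage adds up, the sketch below estimates uncompressed output for a hypothetical configuration: a 1/4 degree grid (1440 × 720 cells), 30 vertical levels, and 20 three-dimensional fields written once per model day as 4-byte floats for 55 years. These parameters are assumptions chosen for illustration, not CESM’s actual output settings, but they already land at about 50 TB.

```python
def snapshot_bytes(n_lon, n_lat, n_levels, bytes_per_value=4):
    """Size of one 3-D field at one instant (uncompressed)."""
    return n_lon * n_lat * n_levels * bytes_per_value

def run_output_bytes(n_lon, n_lat, n_levels, n_fields, snapshots_per_year, years,
                     bytes_per_value=4):
    """Total uncompressed output for a run writing n_fields 3-D fields at a fixed frequency."""
    per_snapshot = snapshot_bytes(n_lon, n_lat, n_levels, bytes_per_value)
    return per_snapshot * n_fields * snapshots_per_year * years

# Hypothetical run: 1/4 degree grid, 30 levels, 20 fields, daily output, 55 model years.
total = run_output_bytes(1440, 720, 30, n_fields=20, snapshots_per_year=365, years=55)
print(f"{total / 1e12:.1f} TB")  # prints 50.0 TB
```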

This is all to say that climate models are a different beast from the software most developers work on. They push the frontier of what is possible to compute and store. Because of this, climate models have software requirements that many other applications do not.

Fortran

The ocean model mentioned in the article, Modular Ocean Model 6 (MOM6), will soon be the new ocean component for CESM. That “new”[3] piece of software is written in Fortran.

If you know what Fortran is and you’re not familiar with climate modeling, you might be pretty shocked to hear that. Why? As far as technology goes, Fortran is old. Fortran was first developed in the late 1950s! Back then, Fortran programs were written on punch cards.

Obviously, Fortran has come a long way since the punch card. Successive revisions of the language have introduced modern programming concepts such as object-oriented programming and interoperability with other languages. However, that doesn’t change the fact that many new languages have been developed since those early days of computing. So why Fortran still? Is it really still the best tool, or was Paul Ford right that climate scientists have lost the “bad software sweepstakes”? One of the first ways we can examine this question is to look at how many models actually use Fortran.

How many climate models are written in Fortran?

The best place to look for climate models (and their data) is the Coupled Model Intercomparison Project (CMIP). This is a massive repository of climate model data that is stored on servers around the world with the goal of comparing the skill of climate models created by climate modeling centers.

Now, I haven’t looked up every single model in this list, but some of the more well known ones (in the U.S.) are:

These models are written by some of the major climate modeling centers in the U.S. (NOAA/GFDL, NCAR, etc.) and they are all written in Fortran.

Keep in mind that this usually means their component models are written in Fortran as well. For example, some well-known component models are:

- Community Atmosphere Model (CAM)

- Modular Ocean Model 6 (mentioned in the article)

The answer to our question of “how many models are actually using Fortran?” is: most, if not all. However, just because all the cool kids are doing it doesn’t mean it’s the right choice. So why Fortran?

So why Fortran for our climate models?

It is true that Fortran can scare off newly minted developers and even some seasoned developers. Fortran is not an easy language to write or read by any standards.

However, Fortran is actually very good at what it does. Fortran stands for Formula Translation. The language was designed to turn math equations on paper into code in a computer. Fortran stands in stark contrast to more modern languages like Python that practically read like English.

Fibonacci in Fortran 90

```fortran
module fibonacci_module
  implicit none
contains
  recursive function fibonacci(n) result (fib)
    integer, intent(in) :: n
    integer :: fib

    if (n < 2) then
      fib = n
    else
      fib = fibonacci(n - 1) + fibonacci(n - 2)
    endif
  end function fibonacci
end module fibonacci_module
```

Fibonacci in Python3

```python
def fib(n):
    # Same convention as the Fortran version: fib(0) = 0, fib(1) = 1
    if n < 2:
        return n
    else:
        return fib(n - 1) + fib(n - 2)
```

In fact, if you like Python, you might be surprised to hear that some of your favorite Python libraries, like SciPy, are partially written in Fortran. If you’re in the R camp (R being a language used primarily for statistics and data science), you might also be surprised to hear that over 20% of R is written in Fortran.[4]

But still, why would the brains behind the world’s foremost climate models pick Fortran over <insert your favorite language>?

Performance. That Ricky Bobby type speed.

Fortran is a bit like NASCAR: no right turns (readability), a focus on speed, and declining popularity. Modern versions of Fortran[5] have made the language much easier to work with, but the main goal of Fortran has always been fast numerical calculation.

Other languages call out to Fortran to speed up numerical calculations. This article on R-Bloggers shows how Fortran (95) compares to C and C++ when used inside of R. If you’re curious about Fortran performance, check out the Computer Language Benchmarks Game for comparisons between Fortran and other languages.

Scientists who build climate models care a lot about performance. A small increase in performance can save hours of compute time. Think about it: if you had programs that needed to run for weeks on expensive hardware, wouldn’t you invest time in shortening that waiting period? Performance is the most important factor for many climate scientists when choosing between languages.[6]
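To put “hours of compute time” in numbers, here is a tiny calculation with hypothetical figures: a three-week (504-hour) run on 10,000 cores that becomes 5% faster (a speedup factor of 1.05) finishes a full day earlier and saves roughly 240,000 core-hours.

```python
def time_saved(wall_hours, cores, speedup):
    """Wall-clock hours and core-hours saved when a run becomes `speedup` times faster."""
    new_wall = wall_hours / speedup
    saved_wall = wall_hours - new_wall
    return saved_wall, saved_wall * cores

# Hypothetical figures: a 3-week run on 10,000 cores, made 5% faster.
wall, core_hours = time_saved(504.0, 10_000, 1.05)
print(f"{wall:.1f} wall-clock hours, {core_hours:,.0f} core-hours saved")
```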

Design

Arrays are first-class citizens in Fortran. Compared to C, Fortran’s higher-level array abstraction makes it easier for scientists to describe their domain. Fortran supports multi-dimensional arrays, slicing, reduction, reshaping, and many optimizations for array-based calculations, like vectorization.
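As a rough illustration of what first-class arrays buy you, the sketch below performs a few common operations on a latitude-longitude temperature field using plain Python lists (no NumPy), with the equivalent Fortran expression in each comment. The Fortran lines use standard intrinsics (`sum` with `dim=`, `size`, `maxval`) and array-section syntax; the Python function names and the tiny 3 × 2 field are made up for the example. Each Fortran one-liner becomes an explicit loop or comprehension in Python, whereas a Fortran compiler knows it is working on whole arrays, which gives it more room to optimize, for example by vectorizing.

```python
# temp[i][j]: i = latitude row, j = longitude column,
# mirroring a Fortran array declared as temp(nlat, nlon).

def to_celsius(temp):
    # Fortran: temp_c = temp - 273.15          (whole-array, elementwise)
    return [[t - 273.15 for t in row] for row in temp]

def zonal_mean(temp):
    # Fortran: zm = sum(temp, dim=2) / size(temp, 2)   (average over longitude)
    return [sum(row) / len(row) for row in temp]

def global_max(temp):
    # Fortran: tmax = maxval(temp)
    return max(max(row) for row in temp)

def lat_band(temp, first, last):
    # Fortran: band = temp(first:last, :)      (1-based, inclusive bounds)
    return temp[first - 1:last]

temp = [[270.0, 280.0],
        [290.0, 300.0],
        [280.0, 284.0]]
print(zonal_mean(temp))      # [275.0, 295.0, 282.0]
print(global_max(temp))      # 300.0
print(lat_band(temp, 2, 3))  # [[290.0, 300.0], [280.0, 284.0]]
```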

Because of this array abstraction, Fortran is uniquely suited to scientific numerical calculation. The way I like to explain this to non-Fortran programmers who are familiar with C++ is that arrays are to Fortran what standard library containers are to C++: a convenient abstraction that makes working with groups of numerical values easier. I’ll admit it’s not the best analogy, but the point I’m trying to drive home is the convenience gained from the abstraction.

Longevity

Longevity of a codebase is very important to the climate modeling community. Scientists cannot write millions of lines of code in a language that may soon be deprecated or changed dramatically. Fortran, throughout its many iterations, has incredible longevity. Modules written in older versions of Fortran are often compatible with the newer versions used today. For the most part, once you can get it compiled, Fortran “just works”. This is important for scientists who want to focus on the science and not on keeping up with the latest language.

Bill Long is a Fortran whiz who has been on the Fortran standards committee for 20+ years. He has personally helped me with a Fortran implementation at work, because he is as nice as he is good at programming in Fortran. Bill captures the longevity of Fortran well in his answer to a question I found in a post: “Why is Fortran extensively used in scientific computing?”

One of the features of Fortran is longevity. If you write the code in Fortran it will likely still work, and people will still be able to read it, decades from now. That is certainly the experience of codes written decades ago. In that time there will be multiple “fashionable” languages with ardent followers that will rise and eventually fade. (Anyone remember Pascal, or Ada?) If you want a fashionable language to learn today, you probably should be looking at Python… Or you can go with Fortran, which is arguably a sequence of new languages over the years, all of which have the same name. And are largely backward compatible. [7]

Bill Long, 2014

Message Passing Interface (MPI)

Programs that need to spread their computation among many compute cores, like climate models, often use the Message Passing Interface (MPI) library. The MPI library can be seen as a gateway to using more computing power. Developers in High Performance Computing (HPC) know that MPI is god and all MPI’s children were born in its image. One time I heard two HPC developers say “this is the way”, like the Mandalorian, in reference to their agreement to use MPI for a C++ project prototype.

At a high level, MPI defines a collection of routines for passing messages between processes, which one can use to exploit massive amounts of compute power. Even the newest fields of computing, like deep learning, still use MPI when they need their computation distributed across many CPUs or GPUs. For example, Horovod can use MPI to exchange gradients between GPUs for distributed deep learning.

However, not all languages can use MPI, and even fewer can use it at the level of performance that Fortran does. Fortran + MPI is the foundation on which many climate models are built so they can scale to massive supercomputers. The need for a performant parallelism framework like MPI limits the number of languages that climate modelers can choose from: the language has to work well with MPI or supply a performant alternative, because modelers need MPI-level performance.
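Here is a minimal sketch of the pattern that lets Fortran + MPI models scale: domain decomposition with halo exchange. The grid (here a 1-D periodic row of cells undergoing simple diffusion) is split among ranks; every time step, each rank sends its edge cells to its neighbours and receives theirs into “halo” cells before updating its own piece. To keep the example self-contained, Python threads stand in for MPI processes and `queue.Queue` mailboxes stand in for point-to-point messages; in real MPI code the exchange would typically be an `MPI_Sendrecv` or a pair of nonblocking `MPI_Isend`/`MPI_Irecv` calls. The function names are hypothetical, and the sketch assumes the cells divide evenly among ranks.

```python
import queue
import threading

ALPHA = 0.25  # diffusion number; this explicit scheme is stable for ALPHA <= 0.5

def serial_diffuse(u, steps):
    """Reference: explicit 1-D diffusion on a periodic domain, all on one 'rank'."""
    n = len(u)
    for _ in range(steps):
        u = [u[i] + ALPHA * (u[i - 1] - 2 * u[i] + u[(i + 1) % n]) for i in range(n)]
    return u

def parallel_diffuse(u, steps, n_ranks):
    """Same computation, decomposed across n_ranks workers with halo exchange."""
    chunk = len(u) // n_ranks  # assumes len(u) is divisible by n_ranks
    # One FIFO mailbox per (sender, receiver, direction): stand-in for MPI messages.
    mail = {}
    for r in range(n_ranks):
        mail[(r, (r - 1) % n_ranks, "left")] = queue.Queue()
        mail[(r, (r + 1) % n_ranks, "right")] = queue.Queue()
    results = [None] * n_ranks

    def rank_main(r):
        left, right = (r - 1) % n_ranks, (r + 1) % n_ranks
        local = u[r * chunk:(r + 1) * chunk]  # this rank's piece of the grid
        for _ in range(steps):
            # Send my edge cells to my neighbours (non-blocking, like MPI_Isend).
            mail[(r, left, "left")].put(local[0])
            mail[(r, right, "right")].put(local[-1])
            # Receive their edge cells into my halo (blocking, like MPI_Recv).
            halo_left = mail[(left, r, "right")].get()
            halo_right = mail[(right, r, "left")].get()
            padded = [halo_left] + local + [halo_right]
            local = [padded[i] + ALPHA * (padded[i - 1] - 2 * padded[i] + padded[i + 1])
                     for i in range(1, chunk + 1)]
        results[r] = local

    workers = [threading.Thread(target=rank_main, args=(r,)) for r in range(n_ranks)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return [x for part in results for x in part]

u0 = [0.0] * 16
u0[3] = 1.0  # a single warm cell
print(parallel_diffuse(u0, 50, n_ranks=4) == serial_diffuse(u0, 50))  # True
```

Because each rank performs exactly the same arithmetic on the same values as the serial loop, the decomposed result matches the serial one exactly. What decomposition adds is communication, which is why the performance of the message-passing layer matters so much at scale.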

If you want to read more on the impact of MPI on HPC in general, I highly recommend Jonathan Dursi’s post “HPC is dying, and MPI is killing it”.

Effort

One of the reasons the climate modeling community hasn’t switched from Fortran is simply the sheer effort that it would take.

Learning a new language is time-consuming. Many scientists do not come from a computer science background, and without that background, learning a new language can be very difficult because concepts learned in one language do not transfer as easily to another. Concepts like object-oriented programming and polymorphism, considered staples of computer science, are not necessarily part of the climate science lexicon.

Legacy code is a real pain. Writing a model takes many developers decades to complete.[8] Switching to a new language is a daunting task. Rewriting a codebase like MOM6, with over 9000 commits of Fortran, is like tearing down and rebuilding a house. Rewriting an entire climate model is like rebuilding the whole neighborhood.

Connecting the Dots

Let’s put together a checklist of language requirements for constructing a climate model:

- Compatibility with MPI (or similar methods of parallelism)

- First class arrays and associated methods

- Stability and compatibility across language versions

- Extreme performance

- Designed for numerical computation

You see where this is going, right? The language described by the requirements above is Fortran. While alternatives exist, Fortran remains the dominant language for climate modeling. But what other languages could scientists use?

Alternative Choices

I would be remiss if I didn’t mention the other contenders that can be used to write a climate model, a few of which I’ll discuss here.

- Chapel

- Julia

- Python

Honorable Mention

- C++

- C

Chapel

Chapel is a parallel programming language developed by Cray (now part of HPE). Parallelism is a first-class citizen in Chapel. The language exposes high-level abstractions for distributed computation and focuses on making those parallel features accessible. Chapel can express scalable routines in significantly fewer lines of code than MPI + X (Fortran, C, C++) needs for similar computations.

The latest success story of Chapel is Arkouda. Essentially, Arkouda is an implementation of NumPy-like arrays written in Chapel. A Python front end, which runs well in Jupyter, sends ZeroMQ messages to a Chapel back-end server distributed across a supercomputer or cluster. If you need to munge some big data (hundreds of GB to tens of TB), this project is worth checking out. It has some quirks but shows a ton of promise.[9]

Chapel has some footholds in the simulation space with efforts like the CHapel Multi-Physics Simulation (CHAMPS) and in cosmological simulation, but no established models in the climate arena that I am aware of.

Chapel’s current shortcomings are compilation time, its package ecosystem, and GPU support. These shortcomings, however, are being addressed. The second major release, which is coming soon, will be a good time to look into using Chapel.

Julia

Julia is a relatively new language that has seen a lot of growth lately. It is a general-purpose language suited to numerical computation and analysis. The language is dynamically typed, has a REPL, and offers interoperability with C and Fortran. The package manager’s integration into the REPL is pretty slick; it feels like the ! syntax I use in Jupyter notebooks to install pip packages on the fly.

Julia is growing, and so is its use in climate modeling. The Climate Modeling Alliance out of Caltech has been working on many climate-related Julia packages, including ClimateMachine, a data-driven Earth system model.

I’ve heard second hand about some of Julia’s shortcomings, but I don’t have the experience with it to be able to articulate them well. For that reason, I’ll leave that as an exercise for the reader.

Python

If you have made it this far, you most likely already know about Python so I am not going to spend much time telling you how awesome it is, but rather the opposite.

Anyone who has tried to write distributed programs in Python will tell you, it’s not easy. Tools like Dask and PySpark can help mitigate some of the issues, but they are mostly used in different ways than the message passing paradigm that dominates climate modeling.

It is possible to use MPI in Python, but scaling it to the levels that Fortran, C++, and Chapel are capable of is extremely difficult, if not impossible. For skeptical developers who want to try this for themselves, check out mpi4py.

Python is used heavily in climate science, but not for the modeling itself, for the reasons above. Mostly, Python is used for visualization and analysis of simulation output, with tools like Xarray and Dask from the Pangeo data ecosystem.

Conclusion

In my opinion, climate scientists did not lose the software sweepstakes: Fortran + MPI is actually very good at what it does, namely massive distributed numerical computation. However, more innovation is needed to make languages like Chapel and Julia the go-to alternatives for climate modeling, so that, as these languages mature and develop stable ecosystems, the climate modeling community can benefit from their advantages over Fortran.

Notes

[2] Image taken from an NCAR slide deck on CESM grids. EDIT: The grid illustrations from this deck are not exact. They were included to show the difference in scale, but this should have been mentioned. Thanks to @DewiLeBars for pointing this out.

[3] MOM6 is nearly 10 years old according to its GitHub repository.

[5] The 2003 version of Fortran was one of the biggest releases. It brought OOP features like polymorphism and inheritance, as well as interoperability with C.

[6] I asked four climate scientists I am close with why they think their field picked Fortran for their models. The responses I got were all about the performance of the language. My favorite response was “fast, that is all”.

[7] Long, Bill. (2014). Re: Why is fortran extensively used in scientific computing and not any other language?

[8] MOM6 has 9000+ commits and nearly 50 contributors, and that doesn’t count all the code that isn’t reflected in MOM6’s GitHub contribution metrics.

[9] Full disclaimer: I’ve contributed to both Chapel and Arkouda. Chapel and its awesome, incredibly kind dev team were the reason I first got into HPC.
